The Double-Edged Sword of Model Complexity

Image credit: Photo by Google DeepMind from Pexels

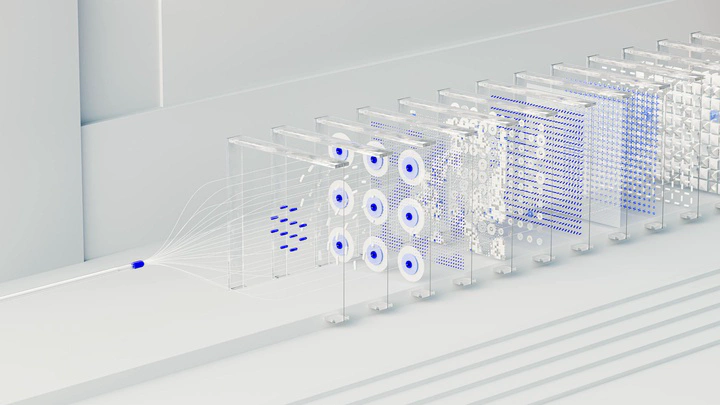

Model Size Obsession: Learning Capacity without Limits

AI pursues ever-larger models.

The AI community now chases billions of parameters for marginal accuracy gains. This drives breakthroughs—and introduces new risks.

High-capacity models deliver both power and peril.

Interpretability Crisis: The Human Blind Spot

Complex models, like trillion-parameter neural networks, defy human interpretability [3].

Interpretability loss — we cannot trace every decision.

- This opacity breeds mistrust and hinders debugging.

Inside the Black Box: Unpredictable Behavior

High-capacity models often achieve record-breaking benchmark accuracy [4] by learning highly intricate patterns. Yet scaling comes at a price: unpredictable behavior.

Optimizing for accuracy above all else, we surrendered control and were left with models that are powerful but unpredictable.

Hidden Shortcuts — models latch onto spurious cues.

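- For example, a medical imaging model appeared adept at diagnosing pneumonia but was actually relying on hospital-specific metal tokens on the X-rays rather than on the lung tissue itself. The tokens revealed which hospital took the image, and pneumonia prevalence differed between hospitals, so the cue correlated with the label without having anything to do with the disease. [1]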
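The same failure mode can be reproduced on toy data. This sketch is an illustration, not a reconstruction of the study cited in [1]: the two features (a 0/1 `token` standing in for the hospital marker and a noisy `lung` signal), the 95% token/label agreement, and the plain logistic regression trained by batch gradient descent are all assumptions chosen to make the shortcut visible. When the token stops tracking the label, accuracy should drop sharply, because the model learned to weight the token more heavily than the lung signal.

```python
import math
import random


def make_data(n, token_agreement, rng):
    """Toy X-ray features: a 0/1 hospital token and a noisy lung signal (both hypothetical)."""
    rows = []
    for _ in range(n):
        y = rng.randint(0, 1)                                    # 1 = pneumonia
        token = y if rng.random() < token_agreement else 1 - y   # spurious cue
        lung = y + rng.gauss(0.0, 1.0)                           # genuine but noisy evidence
        rows.append(((float(token), lung), y))
    return rows


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logreg(rows, lr=1.0, epochs=500):
    """Batch gradient descent on the logistic loss."""
    w, b, n = [0.0, 0.0], 0.0, len(rows)
    for _ in range(epochs):
        gw, gb = [0.0, 0.0], 0.0
        for (tok, lung), y in rows:
            err = sigmoid(w[0] * tok + w[1] * lung + b) - y
            gw[0] += err * tok
            gw[1] += err * lung
            gb += err
        w = [w[0] - lr * gw[0] / n, w[1] - lr * gw[1] / n]
        b -= lr * gb / n
    return w, b


def accuracy(rows, w, b):
    return sum((w[0] * tok + w[1] * lung + b > 0) == (y == 1)
               for (tok, lung), y in rows) / len(rows)


rng = random.Random(0)
train = make_data(500, token_agreement=0.95, rng=rng)   # token tracks prevalence
shifted = make_data(500, token_agreement=0.5, rng=rng)  # token is pure noise here
w, b = train_logreg(train)
train_acc, shifted_acc = accuracy(train, w, b), accuracy(shifted, w, b)
print(f"weight on token={w[0]:.2f}, weight on lung={w[1]:.2f}")
print(f"train accuracy={train_acc:.2f}, accuracy without the shortcut={shifted_acc:.2f}")
```

Nothing in the training accuracy warns of the problem; the weakness only shows up once the spurious cue is decorrelated from the label.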

Emergence — models leap to new abilities. They also leap to new failures.

- For example, GPT-4 (2023) is reportedly far larger than its predecessors and can solve tasks, such as multi-step arithmetic or nuanced reasoning, that stumped smaller models. Researchers have noted that as models grow, they often develop new abilities in abrupt leaps; these are called emergent behaviors [4]. Yet the same phenomenon can also trigger harmful, unexpected failures, making it harder to foresee what a larger model might learn or do.

Scaling complexity inevitably multiplies unknowns, inviting sudden and potentially harmful outcomes.

Testing Falls Short: Coverage and Corner Cases

Verifying robust behavior in these complex models is an overwhelming task.

Coverage metrics deceive: high neuron coverage does not mean thorough testing.

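- Neuron coverage borrows the idea of code coverage: it measures the fraction of neurons that the test inputs activate above a threshold, on the assumption that exercising more neurons probes more behaviors. But studies [2] have shown that high neuron coverage often fails to reveal critical flaws.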
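To see why coverage can mislead, here is neuron coverage in its simplest form (the fraction of hidden neurons pushed above a threshold by at least one test input) applied to a hand-built two-neuron ReLU network. The network, its weights, and the intended rule "flag only when both inputs are positive" are hypothetical. Two tests reach 100% coverage and both pass, yet the input (2, -1) still violates the rule.

```python
def relu(v):
    return max(0.0, v)


def hidden_layer(x, y):
    # Two hand-set ReLU neurons of a toy network (hypothetical weights).
    return [relu(x), relu(y)]


def predict(x, y):
    h = hidden_layer(x, y)
    return h[0] + h[1] - 1.0 > 0.0   # intended behavior: "both inputs positive"


def spec(x, y):
    return x > 0 and y > 0


def neuron_coverage(inputs, threshold=0.0):
    """Fraction of hidden neurons whose activation exceeds `threshold` for at least one input."""
    n_neurons = len(hidden_layer(0.0, 0.0))
    covered = set()
    for x, y in inputs:
        for i, activation in enumerate(hidden_layer(x, y)):
            if activation > threshold:
                covered.add(i)
    return len(covered) / n_neurons


tests = [(1.0, 1.0), (-1.0, -1.0)]
coverage = neuron_coverage(tests)
all_pass = all(predict(x, y) == spec(x, y) for x, y in tests)
counterexample = (2.0, -1.0)
print(coverage, all_pass, predict(*counterexample), spec(*counterexample))
# → 1.0 True True False
```

Full coverage here only says that every neuron fired at least once; it says nothing about whether the combinations of activations that matter for the specification were ever exercised.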

Corner cases slip through — models react unpredictably to slight input variations.

- The input space of a neural network is effectively infinite, and minor, imperceptible perturbations can flip a prediction entirely. Ensuring robustness against all such corner cases remains an open challenge.
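A linear model shows why tiny perturbations add up. Shifting each of d features by ε against the sign of its weight lowers the score by exactly ε·‖w‖₁, a quantity that grows with the number of features. The sketch below uses random weights, 200 features in [0, 1], and ε = 0.01 (all assumed for illustration); the perturbation is the fast-gradient-sign step specialized to a linear scorer, not a method taken from the sources above.

```python
import random


def score(w, b, x):
    """Linear classifier score; positive means class 1."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def sign(v):
    return (v > 0) - (v < 0)


def worst_case_shift(w, x, eps):
    """Move each feature by eps against the sign of its weight (FGSM-style step for a linear model)."""
    return [xi - eps * sign(wi) for wi, xi in zip(w, x)]


rng = random.Random(42)
d = 200                                           # number of input features, e.g. pixels
w = [rng.uniform(-1.0, 1.0) for _ in range(d)]
x = [rng.uniform(0.0, 1.0) for _ in range(d)]
b = 0.5 - score(w, 0.0, x)                        # place x at score +0.5: clearly class 1

eps = 0.01                                        # 1% of the feature range
x_adv = worst_case_shift(w, x, eps)
l1 = sum(abs(wi) for wi in w)
max_change = max(abs(a - c) for a, c in zip(x, x_adv))
print(f"clean score {score(w, b, x):+.2f}, perturbed score {score(w, b, x_adv):+.2f}")
print(f"score drop eps*||w||_1 = {eps * l1:.2f}, max per-feature change = {max_change:.3f}")
```

No single feature moves by more than 1% of its range, yet the accumulated effect across 200 features pushes the score well past the decision boundary.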

Hard to test, hard to trust. How do we harness complexity while managing its risks?

- Domain-aware verification offers the solution.

Keeping Complex Models in Check with Domain-Aware Verification

Domain knowledge drives realistic test cases. Instead of brute-force analysis of model weights, we simulate domain-specific scenarios. This shifts the focus from statistical performance and neuron coverage to semantic coverage of critical behaviors.

By encoding expert insights as explicit domain rules, we specify how the model should, and should not, behave. This black-box approach checks model outputs against those requirements, with no need to inspect weights.
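One way to make such rules explicit is to pair a premise (when the rule applies) with a conclusion (what the output must satisfy), then check them by calling the model as a black box. The `DomainRule` structure, the two rules with their thresholds, and `risk_model` below are hypothetical illustrations, not VerifIA's API; the model deliberately ignores income, so the first rule flags it.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Sample = Dict[str, float]


@dataclass
class DomainRule:
    """A hypothetical expert rule: if `premise` holds for an input, `conclusion` must hold for the output."""
    name: str
    premise: Callable[[Sample], bool]
    conclusion: Callable[[Sample, float], bool]


def check_rules(model: Callable[[Sample], float], rules: List[DomainRule],
                samples: List[Sample]) -> List[Tuple[str, Sample, float]]:
    """Black-box check: the model is only called, never inspected."""
    violations = []
    for s in samples:
        out = model(s)
        for rule in rules:
            if rule.premise(s) and not rule.conclusion(s, out):
                violations.append((rule.name, s, out))
    return violations


def risk_model(s: Sample) -> float:
    """Hypothetical default-risk model under test; it (wrongly) ignores income."""
    return 0.5 * s["debt_ratio"] + 0.25


rules = [
    DomainRule("debt_free_high_earner_is_low_risk",
               premise=lambda s: s["debt_ratio"] == 0.0 and s["income"] >= 100_000,
               conclusion=lambda s, risk: risk < 0.2),
    DomainRule("risk_is_a_probability",
               premise=lambda s: True,
               conclusion=lambda s, risk: 0.0 <= risk <= 1.0),
]
samples = [
    {"income": 150_000, "debt_ratio": 0.0},
    {"income": 40_000, "debt_ratio": 0.5},
    {"income": 60_000, "debt_ratio": 0.0},
]
violations = check_rules(risk_model, rules, samples)
for name, s, out in violations:
    print(f"{name} violated for {s}: risk={out:.2f}")
```

Because `check_rules` only needs a callable, the same rules apply unchanged to any model that can be queried.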

Yet statistically validating that the model behaves consistently remains hard: with limited labeled data, it is difficult to assess coverage across all behaviors.
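The data problem can be quantified. Assuming the checked samples are drawn independently from the deployment distribution, if none of n samples violates a rule, the exact one-sided 95% upper bound on the true violation rate is 1 − 0.05^(1/n), roughly 3/n (the "rule of three"). A few hundred clean examples therefore cannot rule out a violation rate of about 1%. The helper name below is illustrative.

```python
def violation_rate_upper_bound(n_checked, confidence=0.95):
    """Exact one-sided upper bound on a rule's violation rate when none of
    n_checked i.i.d. samples violated it: solves (1 - p) ** n = 1 - confidence for p."""
    alpha = 1.0 - confidence
    return 1.0 - alpha ** (1.0 / n_checked)


for n in (50, 300, 5_000):
    print(f"n={n:>5}: violation rate could still be up to {violation_rate_upper_bound(n):.2%} "
          f"(rule of three: {3 / n:.2%})")
```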

VerifIA tackles this with population-based metaheuristics. It generates synthetic, constraint-feasible samples to stress-test model brittleness. This simulates real-world variation beyond limited data. For example, in loan approval, VerifIA synthesizes profiles with higher incomes while holding other traits constant. It then checks that default risk declines—automating rule-based testing for “income ↑ ⇒ default risk ↓.”
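The search-and-check loop can be sketched with a generic evolutionary algorithm. This is an illustration under stated assumptions, not VerifIA's implementation or API: the feasibility bounds, the 10,000 income step, the mutation scales, and `risk_model` (which contains a deliberately planted upward risk ramp between 100k and 160k income) are all hypothetical. Fitness is how much raising income alone raises predicted risk, so truncation selection pushes the population toward profiles that violate "income ↑ ⇒ default risk ↓", while clipping keeps every candidate inside the feasible region.

```python
import math
import random

# Feasibility constraints for generated profiles (hypothetical).
BOUNDS = {"income": (10_000.0, 300_000.0), "debt_ratio": (0.0, 1.0)}
STEP = 10_000.0  # hypothetical income increase applied by the rule check


def risk_model(p):
    """Hypothetical default-risk model with a planted flaw in the 100k-160k income band."""
    risk = 0.6 * p["debt_ratio"] + 0.4 * math.exp(-p["income"] / 50_000)
    if 100_000 <= p["income"] < 160_000:
        risk += 0.3 * (p["income"] - 100_000) / 60_000  # spurious upward ramp
    return risk


def clip(p):
    """Project a profile back into the feasible box."""
    return {k: min(max(v, BOUNDS[k][0]), BOUNDS[k][1]) for k, v in p.items()}


def violation(p):
    """Positive when raising income alone raises predicted risk (rule violated)."""
    richer = clip(dict(p, income=p["income"] + STEP))  # all other traits held constant
    return risk_model(richer) - risk_model(p)


def random_profile(rng):
    return {k: rng.uniform(lo, hi) for k, (lo, hi) in BOUNDS.items()}


def mutate(p, rng):
    return clip({"income": p["income"] + rng.gauss(0.0, 15_000.0),
                 "debt_ratio": p["debt_ratio"] + rng.gauss(0.0, 0.05)})


def search(pop_size=40, generations=30, seed=7):
    rng = random.Random(seed)
    population = [random_profile(rng) for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=violation, reverse=True)   # most violating first
        parents = population[: pop_size // 2]           # truncation selection (elitist)
        children = [mutate(rng.choice(parents), rng) for _ in range(pop_size - len(parents))]
        population = parents + children
    best = max(population, key=violation)
    return best, violation(best)


best, gap = search()
print(f"income={best['income']:,.0f}  debt_ratio={best['debt_ratio']:.2f}  risk increase={gap:+.3f}")
```

The model is touched only through `risk_model(...)` calls, so the loop stays black-box; another population-based metaheuristic, such as differential evolution, could replace the mutation and selection steps without changing the rule check.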

By embedding domain rules and crafting targeted tests, VerifIA ensures complex models behave predictably in critical scenarios—delivering domain-aligned assurance.

References

- [1] Steinmann et al., "Navigating Shortcuts, Spurious Correlations, and Confounders: From Origins via Detection to Mitigation," arXiv.

- [2] Yuan et al., "Revisiting Neuron Coverage for DNN Testing: A Layer-Wise and Distribution-Aware Criterion," arXiv.

- [3] Christi Lee, "Explainable AI: Striking the Balance Between Accuracy and Interpretability" (Day 7/30), Medium.

- [4] Veronika Samborska, "Scaling up: how increasing inputs has made artificial intelligence more capable," Our World in Data.